Building Impact Radius #1: From Problem to Foundation

The problem of data validation

When you make changes in a data project, you’re rarely worried about the code itself. Instead you’re worried about “what do I actually need to validate to ensure my data is right?”

- Does the finance team have the metric they asked for?

- Does the fixed churn rate metric finally make sense to the marketing team?

- While improving the code quality, were numbers modified that shouldn’t be?

At Recce, we’re obsessed with making sure your data isn’t just accurate, but contextually correct, which is why we built our new Impact Radius feature. In this blog post I’ll talk about the reasons why we built it and issues we had along the way.

Users want data diffing, but worry about compute cost

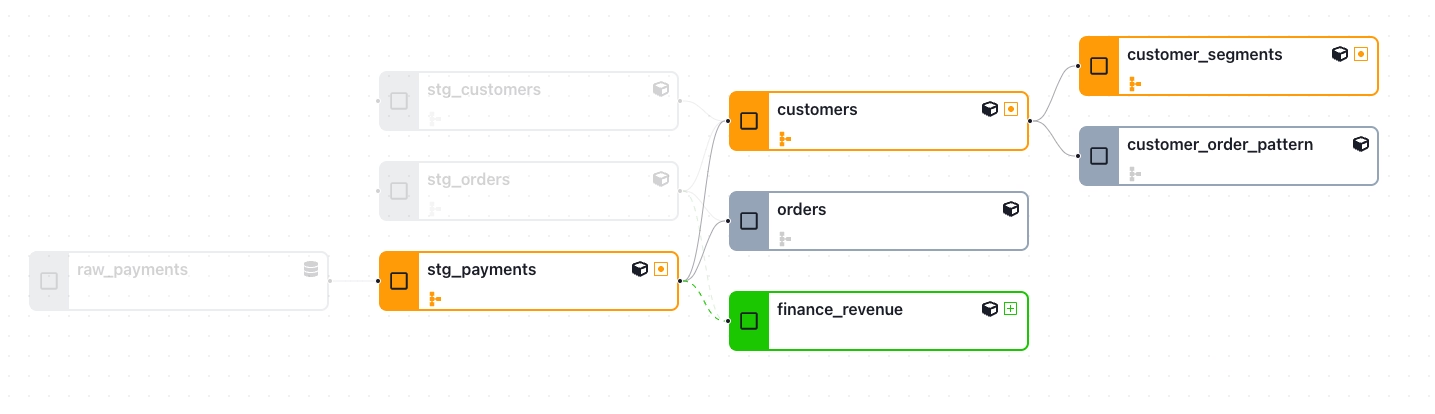

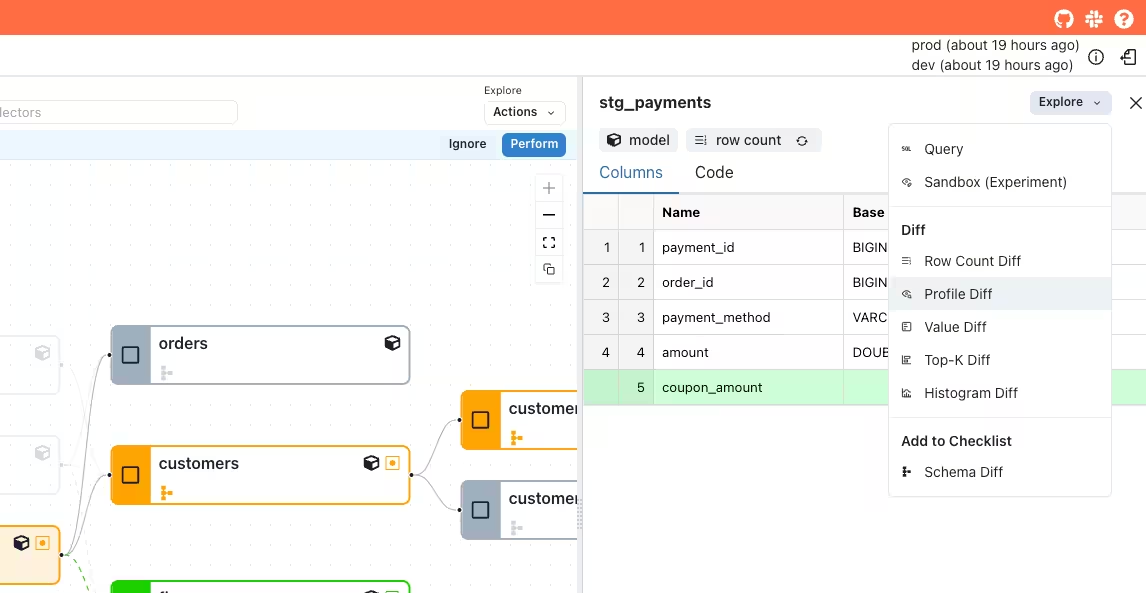

In our user interviews we know that analytics engineering teams struggle with data validation and think data diffs could be helpful. We see this when we demo Recce’s lineage diff to people. The reaction is consistently: “Wow 😮 finally, I can see what my change actually affects!” As you can see in the screenshot, you can see exactly what changed and whether that change is a modification, addition, or deletion of data in a given model.

However, the “wow” moment quickly fades into practical concerns:

- Question 1: “How many models should I validate? All the ones shown here?”

Even with a clear view of changed models, users still felt overwhelmed by the validation scope. - Question 2: “What’s the cost when I query the warehouse?”

Since our data diffs are one-click easy, users worried about accidentally running expensive queries.

These questions revealed something crucial: users want precision, not just visibility. Visibility is nice, but precision helps save time and compute costs.

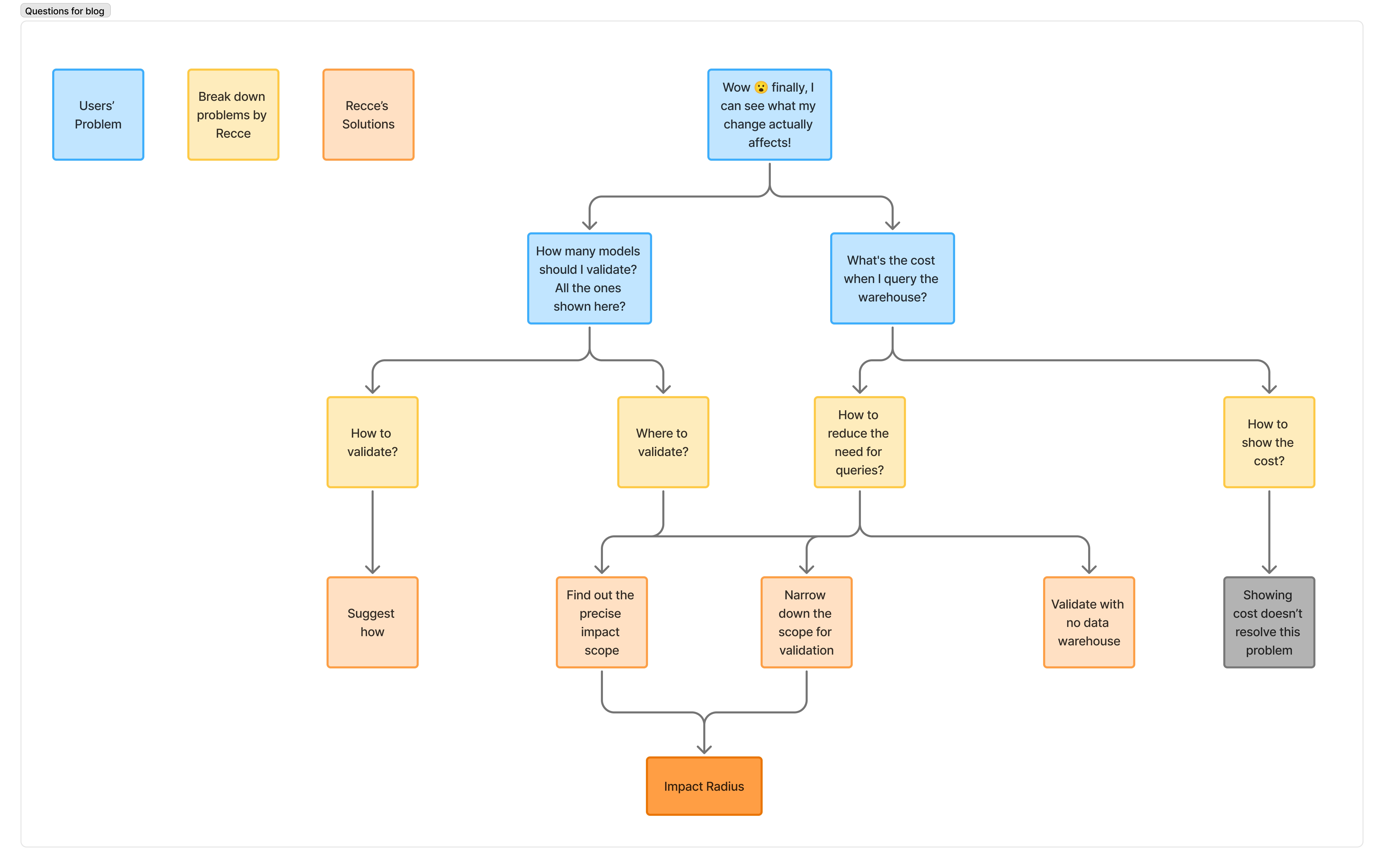

Brainstorming what is the real problem and potential solutions

These two questions were killing our adoption. Users loved seeing what changed, but were hesitant due to validation uncertainty and cost fears.

Here’s the thing: we’re a control plane tool. The actual queries happen in users warehouse, not ours. We don’t know our users’ compute costs, and showing cost information wouldn’t solve the greater problem.

The real insight: we needed to reduce the need for queries in the first place.

That lead us to think, we should we narrow down the scope of what models require validation. Or we can enable validation without data warehouse querying? After discussion, we went with narrowing down the scope.

We broke this into two parts:

- Where to validate? - What’s actually impacted vs. just downstream?

- How to validate? - What’s the right validation strategy?

Instead of explaining this abstractly, let me show you how we mapped out the problem:

This flowchart became our north star. Every user question and pain point led us to the same conclusion: We needed to build something that answered “where to validate?” with surgical precision. Alongside our other features, we’re building a process that helps you validate what matters, at scale.

That something became Impact Radius.

Under the hood of Impact Radius

Once we had clarity on the problem, we realized we’d actually been building toward the solution all along. The lineage diff, breaking change analysis, and column-level lineage we’d already built? Those were the foundation pieces.

We just needed to connect them intelligently.

Version one: Basic impact detection

Looking back, our lineage diff was actually Impact Radius version one, we just didn’t call it that yet.

The approach was straightforward:

- Use dbt’s

state:modified+selector to detect all new nodes and changes to existing nodes - Create a lineage diff showing:

- Modified models (orange)

- New models (green)

- Deleted models (red)

- Show all downstream models of the modified ones

Example in interactive demo: https://pr46.demo.datarecce.io/#!/lineage

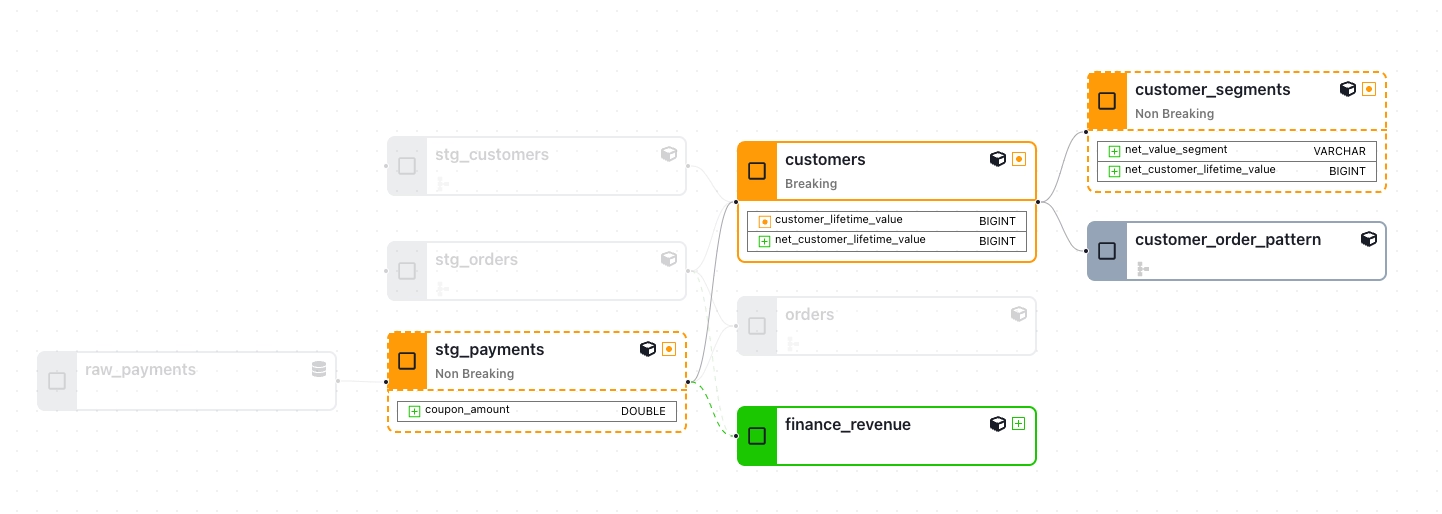

In this demo, we can see

- 4 modified models total:

- 3 modified:

stg_payments,customers,customer_segments - 1 new:

finance_revenue

- 3 modified:

- 5 downstream models potentially affected:

stg_paymentshave 5 downstream modelscustomershas 2 downstream modelscustomer_segmentsandfinance_revenuehave no downstream models

The results were promising but limited.

- Clear visibility into what changed

- Basic impact scope (everything downstream

- Still too many models to validate

Change a early upstream models like stg_payments, and you’re back to test everything downstream.

Version two: Breaking change analysis

That’s when we realized: not all changes are equal. Some break downstream data, others don’t.

With our breaking change analysis feature, we can potentially reduce validation in many cases.

Using SQLglot (a no-dependency SQL parser, transpiler, optimizer, and engine) semantic analysis of the modified models, we can determine if they introduce breaking changes, which tells us if downstream models are actually impacted.

- Breaking change: Impacts downstream data

- Non-breaking change: No impact on downstream data

This was our key insight: separate the modifications from the impacts. This flipped our entire approach. Instead of focusing directly on the downstream models, we analyze the modified models and their dependencies, to then better define the impact on downstream models.

Example of an orders model like this:

-- Before

SELECT customer_id, order_date, total_amount

FROM raw_orders

-- After A: Breaking change

SELECT customer_id, order_date, total_amount

FROM raw_orders

WHERE order_date >= '2024-01-01' -- New filter

-- After B: Non-breaking change

SELECT customer_id, order_date, total_amount, created_at

FROM raw_orders

Example A adds a WHERE clause that changes the row set. This semantic understanding lets us classify it as breaking: all downstream models will have different data.

Example B adds a new column while existing columns remain unchanged. This is non-breaking because downstream models using the existing columns won’t be affected.

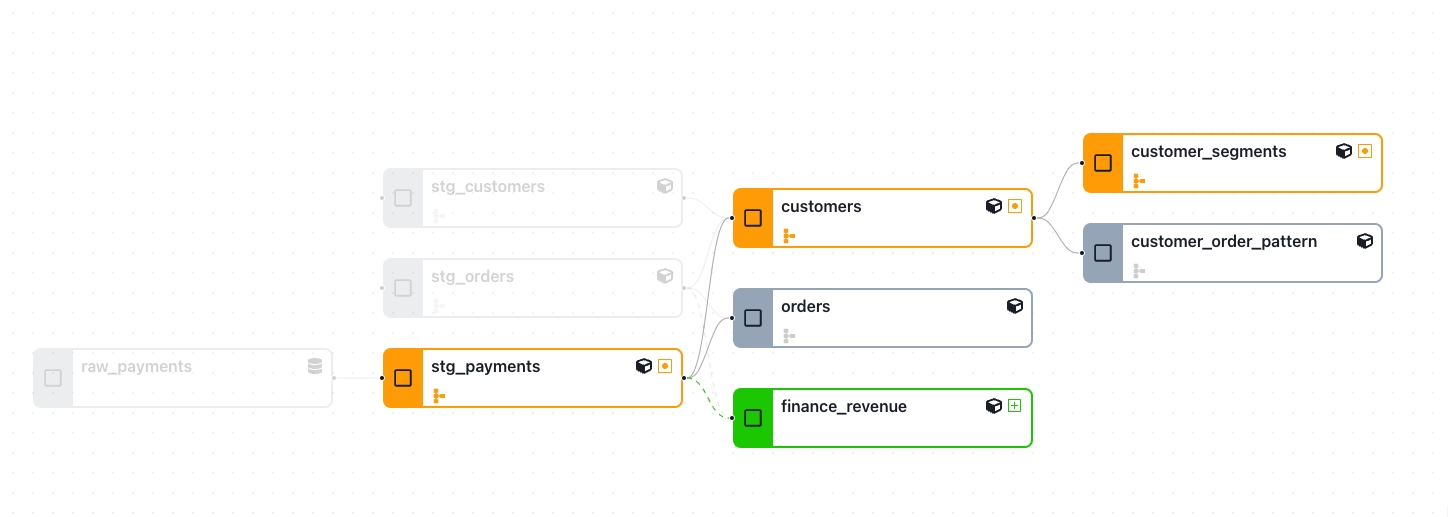

Applied to our demo: https://pr46.demo.datarecce.io/#!/lineage

The result shows the power of this approach:

- In the three modified models,

stg_paymentsandcustomer_segmentsintroduce non-breaking changes. Meaning even though there are five downstream models, zero we need to validate. - Only the

customersintroduces a breaking change. Meaning we need to validate the two downstream models.

The remaining problem: when a breaking changes in early upstream models, our users still need to validate all downstream models and worry about the query costs.

Version 2 validated our direction, but we knew there was more to build . We built the foundation pieces (breaking change analysis and column-level lineage), and we could see how to connect them for something much more powerful later.

The something becomes Impact Radius version 3.

Impact Radius v3 is Live

After months of research and engineering, Impact Radius v3 launched in Recce v1.10.0.

Try it now:

- Upgrade:

pip install recce -U - Or explore our interactive demos to experience column-level impact precision

We’re excited (and nervous) to see how teams use precise validation scope in practice.

Next article: version three

This journey from “validate everything” to “validate exactly what matters” took us months of research, dead ends, and breakthroughs. In our next article, we’ll share how we build the version 3: the inspiration, the dependency types and engineering decisions that make this precision possible.

Are you facing the same validation challenges? Follow along as we document breakthroughs that could change how your team thinks about data validation.