Basic Data Validation

See how schema changes affect downstream models and data consumers

Summary

The customer lifetime value (CLV) calculation included incomplete orders (shipped, pending, and even returned items) instead of only completed transactions. The inflated CLV numbers were quietly driving bad business decisions across teams.

When the data team discovered the issue, they faced a classic problem: the existing numbers were technically valid but contextually wrong, and correcting them risked breaking the business processes built on them. The corrected CLV calculation dropped the average customer value from $2,759 to $1,872, affecting 98% of customers. Even worse, 26% of customers would shift between value segments, potentially disrupting marketing campaigns and sales strategies.

Instead of deploying the fix and dealing with confused stakeholders later, the data team used the situation as an opportunity to validate their approach. They showed exactly which customers would be affected, how segmentation thresholds might need adjustment, and gave business teams time to prepare for the new reality.

The result? A technically and contextually correct fix that stakeholders understood and trusted.
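To make the error concrete, here is a minimal sketch of the two calculations. It assumes CLV is simplified to the sum of a customer's order amounts. The order records, the status names, and the `clv_by_customer` helper are hypothetical, not the team's actual model.

```python
from collections import defaultdict

# Hypothetical order records: (customer_id, status, amount).
ORDERS = [
    ("c1", "completed", 120.0),
    ("c1", "pending", 80.0),
    ("c1", "returned", 50.0),
    ("c2", "completed", 200.0),
    ("c2", "shipped", 100.0),
]


def clv_by_customer(orders, statuses=None):
    """Sum order amounts per customer.

    If `statuses` is given, only orders with one of those statuses count.
    Customers with no matching orders are omitted from the result.
    """
    totals = defaultdict(float)
    for customer_id, status, amount in orders:
        if statuses is None or status in statuses:
            totals[customer_id] += amount
    return dict(totals)


# The original (inflated) logic: every order counts, whatever its status.
inflated = clv_by_customer(ORDERS)

# The corrected logic: only completed transactions count.
corrected = clv_by_customer(ORDERS, statuses={"completed"})

print(inflated)   # {'c1': 250.0, 'c2': 300.0}
print(corrected)  # {'c1': 120.0, 'c2': 200.0}
```

Both results are valid sums, which is why the error stayed hidden: nothing fails technically, and only the business definition of a countable order separates the two.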

Problems

- Broken trust: When stakeholders learn their core business metrics have been wrong, they question both the new numbers and the team behind them.
- Hidden data error: CLV calculations included incomplete orders for months, silently inflating customer values and misleading business decisions.
- Massive downstream impact: 98% of customers affected and 26% shifting value segments. Changes this big break campaigns, forecasts, and strategies.
- Validation complexity: Proving a 32% drop in average CLV is "correct" requires showing stakeholders exactly which customers changed and why.
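The 32% figure follows directly from the two averages quoted in the summary. A short check, using a hypothetical `relative_drop` helper:

```python
def relative_drop(before: float, after: float) -> float:
    """Return the fractional decrease from `before` to `after`."""
    return (before - after) / before


# Average CLV before and after the fix, as quoted in the summary.
drop = relative_drop(2759, 1872)
print(f"{drop:.1%}")  # prints 32.1%
```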

Solutions

-

Visibility: Make what changed instantly clear

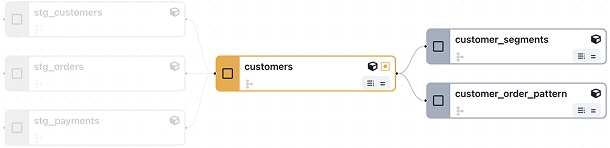

Column-level navigation enabled the data team to trace the upstream error into the downstream impacted metrics. -

Verifiability: Prepare evidence and bring stakeholders into the loop

Profile diff, value diff, and custom queries helped business teams see how data team examined the changes and made sure the fix was correct. -

Velocity: Build repeatable processes for sustainable speed

By bringing stakeholders into the validation process, the team established a collaborative framework where future data issues can be fixed together, reducing resistance and accelerating resolution.
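The kind of evidence described above (which customers changed, and which moved between segments) can be sketched in plain Python. This illustrates the idea of a value diff only; it is not the tool's actual implementation. The segment thresholds, the sample CLV values, and the `segment` and `value_diff` helpers are all hypothetical.

```python
def segment(clv: float) -> str:
    """Assign a value segment using hypothetical thresholds."""
    if clv >= 200:
        return "high"
    if clv >= 100:
        return "medium"
    return "low"


def value_diff(before: dict, after: dict) -> dict:
    """Compare per-customer CLV between two versions of a model.

    Returns the customers whose value changed, the subset that also moved
    to a different segment, and both counts as a share of all customers.
    """
    customers = sorted(set(before) | set(after))
    changed = [c for c in customers if before.get(c) != after.get(c)]
    shifted = [
        c for c in changed
        if segment(before.get(c, 0.0)) != segment(after.get(c, 0.0))
    ]
    total = len(customers)
    return {
        "changed": changed,
        "shifted": shifted,
        "pct_changed": len(changed) / total if total else 0.0,
        "pct_shifted": len(shifted) / total if total else 0.0,
    }


# Hypothetical CLV values before and after the fix.
before = {"c1": 250.0, "c2": 300.0, "c3": 90.0, "c4": 150.0}
after = {"c1": 120.0, "c2": 200.0, "c3": 90.0, "c4": 140.0}

report = value_diff(before, after)
print(report["changed"])      # ['c1', 'c2', 'c4']
print(report["shifted"])      # ['c1']
print(report["pct_changed"])  # 0.75
print(report["pct_shifted"])  # 0.25
```

Sharing a report like this with business teams before deploying gives them the list of affected customers and a basis for deciding whether segment thresholds need adjusting.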
